Over the past few weeks, social media has lit up with talk of a new AI technology that could put the credibility of students’ assessments in doubt. So what is this technology, and what might it mean for education? This blog examines these questions and considers whether such advances mean that now is the time to rethink how we teach and assess our students.

The technology that has everyone talking is Chat GPT-3, developed by OpenAI. It is built on one of the largest language models currently available. To use it, you simply type a question or statement and press return, and the tool almost instantly generates a human-like response in the form of an essay, blog post, set of multiple-choice questions, poem, piece of computer code and so on. This is much more sophisticated than anything we have seen before, and it works in a different way from a search engine. Essentially, it uses machine learning to predict the next word in a sentence based on the preceding text. The results are quite remarkable.
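For readers curious about what “predicting the next word” means in practice, here is a deliberately tiny sketch in Python. It is an illustration only, not how Chat GPT-3 is actually built: it simply counts which word most often follows another in a made-up sentence, whereas a real language model uses a large neural network trained on vast amounts of text. The function names and the sample sentence are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training sentence, chosen only for illustration.
corpus = "the student wrote the essay and the student passed the exam"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # prints "student"
```

In this toy corpus, “student” follows “the” more often than any other word, so that is the prediction. The principle of choosing a likely continuation from the preceding text is the same one described above, just at a vastly smaller scale.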

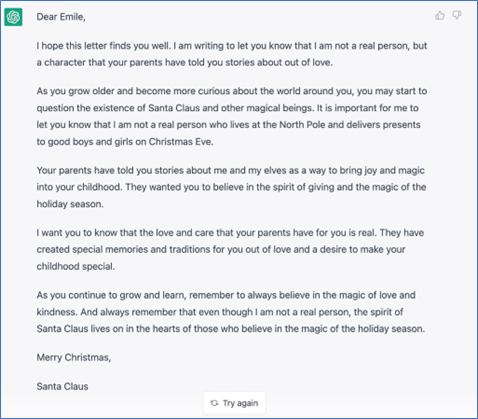

Over the Christmas period, social media was full of amusing conversations people had been having with Chat GPT-3. One example that made me smile was someone asking Chat GPT-3 to tell their child that Father Christmas wasn’t real.

One of the most impressive features of GPT-3 is its ability to generate convincing human-like responses, as the response to the Father Christmas question highlights. However, this also means that it is becoming increasingly difficult to distinguish between a student’s own work and work they have generated using AI technology such as this. This has resulted in much debate about students using AI to cheat. Although detection technology is also developing quickly, the text-matching services used by institutions would not detect plagiarism in these cases because, technically, the text is original, just not written by a human being.

Yet this is not a new issue. If we think back to Covid times and the online exams which were necessary, concerns were raised about the increase in the volume of plagiarism cases due to students having internet access whilst sitting their exams.

Perhaps now is the time to take another look at how we assess our students. Chat GPT-3 may just be the catalyst which forces us to do this. One thing is certain: given the increase in usage of these AI tools, demonstrated by the recent valuation of Jasper.AI at $1.5 billion, these technologies are not going anywhere.

How we change our approach to assessment is a complex topic which requires input from our academic colleagues and policymakers. Interesting work in this area is already being undertaken by Professor Kathleen M Quinlan and Dr Edd Pitt from the Centre for the Study of Higher Education at the University of Kent.

However, in an attempt to learn more about Chat GPT-3, I thought I would ask it for some advice here. I posed the following question: “What might the impact of Chat GPT-3 be on assessment?”

“There is no doubt that GPT-3 and similar technologies could make it easier for students to produce written work that appears to be of high quality. However, it is important to note that simply generating text does not guarantee that a student has actually learned the material.

In order to assess whether a student has truly understood a subject, it is necessary to go beyond just evaluating their written work. Teachers could use a variety of assessment techniques, such as quizzes, exams, presentations and group projects, to ensure that students have a deep understanding of the material.”

So, as you can see, the response is quite impressive! It identifies the relevant issue: that the student or user may not understand their own material. This is an important point because, while Chat GPT-3 can provide quick answers to questions, it does not build the critical thinking and problem-solving skills that are essential for academic and lifelong success. To Chat GPT-3’s credit, it does go on to make some useful suggestions on how we might adapt our assessment techniques to gauge students’ levels of understanding. Considering I didn’t actually ask it for any solutions, it is quite remarkable that it predicted I would find this information useful, although I did smile to myself that none of its suggestions involved Chat GPT-3 itself, for example asking students to critique a response it had provided. That said, I still feel we must also comment on the benefits that AI technology such as this can bring to the field of education. Teachers can use, and are already using, the information it generates to inform their teaching.

As we were on a roll, I then wondered if I could get my new friend (Chat GPT-3) to give me some pointers which would help me with my job. I posed the following question: “I like the idea of asking students to submit an assignment along with a video or audio presentation what do you think?”

“Asking students to submit an assignment along with a video or audio presentation could be a great way to enhance their learning experience and allow them to practice different skills. Here are a few potential benefits of this approach:

- Multimodal learning: Video and audio presentations can provide students with different ways to express their understanding of the material, which can be beneficial for students who have different learning styles. This can also provide an opportunity for students to practice different forms of communication, which are important for many career fields.

- Increased engagement: Creating a video or audio presentation can be more engaging for students than writing an essay or answering multiple-choice questions, as it allows them to be more creative and expressive.

- Authentic assessment: Video and audio presentations can be more authentic than other forms of assessment, as they allow students to demonstrate their understanding in a way that is more similar to how they would use that understanding in the real world.

- Self-reflection: Creating a video or audio presentation can be a great way to encourage self-reflection, as students can think about how they communicate and what they communicate, which can be beneficial for their own personal growth.

However, it’s worth noting that implementing this type of assessment may require more resources and technology, such as providing appropriate software or hardware, as well as more time for students to complete the presentation and for teachers to evaluate it. Additionally, you may need to provide guidelines, rubrics, and examples for students to follow and to be able to evaluate the presentations fairly and objectively.

In summary, adding a video or audio presentation to an assignment can be a great way to enhance the learning experience for students and provide a more authentic assessment of their understanding, but it should be well thought out and implemented appropriately to be effective.”

Again, with the exception of the learning styles comment, the response is quite impressive, highlighting why video and audio assignments are so valuable. It is also measured, pointing out realistic concerns about resources and time. This balanced, insightful response has actually been very useful in helping me to decide what might be the best method of assessment in this case.

I would say that the closing summary point from Chat GPT-3 pretty much hits the nail on the head: whatever assessment we use, it must be well thought out and implemented appropriately to be effective. Well said, Chat GPT-3.

Learning about this technology prompts a couple of different responses from colleagues. The first is primarily concerned with detection and prevention: ‘How do we detect and prevent this new kind of cheating on essays and take-home exams?’ The second is what you highlight here: devising and implementing new kinds of assessment that tap understanding in a way that cannot be demonstrated by an AI.

The latter approach seems much more fruitful to me, but it can be more or less radical. The most radical option would be to declare the standard essay format essentially dead. If an AI can write a reasonable essay, then what is the point of training students to write them? I teach research methods, and an analogy would be teaching students to conduct statistical tests with pen and paper. We don’t ask students to do that any more because computers can do it for them. Students would instead focus on (as you say) demonstrating their learning in different ways, and on critiquing and improving what an AI would produce in response to an essay-type prompt.

However, I can see that this wholesale overhaul of assessment would be intimidating to already overstretched staff. I think a helpful middle ground would be making sure that our essay prompts focus very strongly on our specific module content. Large language models are using basically the whole internet to produce their responses – at their best they will sound like a good student who has read around the topic on their own but has not attended any lectures or seminars, or read any of the assigned readings. If you write an essay prompt that would be difficult for such a student to perform well on, then the AI will struggle as well.

(Of course, there are ways around this by crafting specific prompts for the AI that involve references to your course materials, but this will be too steep a barrier for most students.)

Thank you, these are all excellent points.

This is just the kind of debate I think we need across the sector in order to find solutions that are effective but proportionate.

Good to raise this. My students are excited about Chat GPT, and I encourage them to use it.

The underlying technology, transformers, is also used in search engines nowadays. Chat GPT shares the following property with search engines: if you give a general or bland prompt, you get generic results back. To get something unexpected out of Chat GPT, you need to ask it a series of informative questions, and do some processing of the answers.

At its most basic, Chat GPT has learned something that students and academics are good at: take in a lot of information, mix it up, and produce an essay, or a research paper, or a grant application. No wonder the Chat GPT output is similar to the bland stuff we read everywhere: it has learned it from us!

Chat GPT encourages us to think about what is truly original, and whether we are willing to accept truly original essays, research papers and grant applications. We don’t want to read a novel that is just an imitation of Dickens, yet that is exactly what Chat GPT will happily produce for us. Academia is a world of safe imitations, whether produced by humans or machines. Let’s encourage and reward creativity, originality, risk taking, and failure.

Philippe De Wilde

Prof. of Artificial Intelligence

Division of Natural Sciences