Srivas Chennu, University of Kent

DeepMind has recently announced a new partnership with the UK's National Health Service (NHS), under which the artificial intelligence firm will develop machine learning technology for breast cancer research.

DeepMind, a Google subsidiary, is perhaps best known for successfully building AI that is now better than humans at the ancient game of Go. But in recent months – when attempting to apply this tech to serious healthcare issues – it has been on the sidelines of a data breach storm.

In July, London's Royal Free hospital, an NHS trust, was found to have breached UK data protection law through its collaboration with DeepMind.

The Information Commissioner’s Office (ICO) found that Royal Free’s decision to share 1.6m personally identifiable patient records with DeepMind for the development of Streams – an automated kidney injury detection software – was “legally inappropriate”. DeepMind wasn’t directly criticised by the ICO.

The shared records included whether patients were HIV-positive, as well as details of drug overdoses and abortions. The Royal Free breach attracted considerable media attention at the time, and it means that DeepMind's latest partnership with an NHS trust will be scrutinised carefully.

DeepMind will be working with Cancer Research UK, Imperial College London and the Royal Surrey NHS trust to apply machine learning to mammography screening for breast cancer. This is a laudable aim, and one to be taken very seriously given DeepMind's track record. London-based DeepMind emerged from academic research, assisted by Google's deep pockets, and is now owned by Google's parent company Alphabet.

Its success has come from recruiting some of the best machine learning and AI scientists, organising them into goal-driven teams, and freeing them from having to teach or apply for funding.

Mind reader

DeepMind appears to have learned from the Royal Free data breach, having "reflected" on its own actions when it signed on to work with the trust. It says the breast cancer dataset it will receive from Royal Surrey is "de-identified", which should mean that patients' personal identities won't be shared.

Another key difference is that the Royal Surrey dataset was explicitly collected for research – indicating that participants consented to their data being shared in this way. DeepMind has also been upfront about its approach to data access, management and security. It has appointed independent reviewers and committed to verifiable data audits, in the hope of building trust and confidence.

Given DeepMind's continued collaboration with the NHS on a range of research projects, citizens are rightly concerned about how private corporations might exploit data they have willingly shared for publicly funded work.

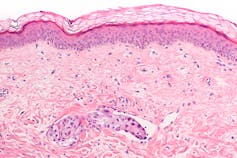

Few details about the Royal Surrey research project – which is in the early stages of development – have been released, but it's likely that DeepMind will focus on applying deep neural networks to mammogram images to automatically identify signatures of cancerous tissue. This approach would be similar to its Moorfields Eye Hospital project, in which DeepMind is building machine learning models that can predict macular degeneration and blindness from retinal scans.

DeepMind is probably also exploring the possibility of using novel deep reinforcement learning algorithms to train the machine learning models. These algorithms draw on insights from empirical neuroscience research into how the human brain learns from reward and punishment.

Reinforcement learning – in which an agent learns by trial and error from rewards, rather than from labelled examples – differs from the more conventional supervised and unsupervised learning methods used in machine learning. It is the technique that enabled DeepMind to train agents that learn to play Go and many other games better than humans.

Apart from these algorithmic advances, DeepMind might find, as it already has with the Streams trial, that there are many technological tweaks that can improve how doctors treat patients, without any need for machine learning at all.

Human nature

From my own experience in applying data analytics to medical diagnostics in neurology, I know that – even if things go well for DeepMind and it manages to build a machine learning model that is excellent at detecting the early signs of breast cancer – it might well face a more practical problem in its application to the real world: interpretability.


The practice of medicine today relies on trust between two humans: a patient and a doctor. The doctor judges the best course of treatment for a patient based on their individual clinical history, weighing up the relative pros and cons of the different options available. The patient implicitly trusts the doctor’s expertise.

But will patients or doctors trust a machine if it produces the same recommendation based on an algorithm? While machine learning has become commonplace behind the scenes in many contexts, its application in healthcare is fraught with the challenge of trust.

If a doctor cannot understand and communicate the rationale behind a recommendation, it might be very difficult to convince either doctor or patient to adopt it. And no machine learning algorithm is likely to be perfect. Both false positives and false negatives carry serious consequences in healthcare: a missed cancer can delay treatment, while a false alarm can cause anxiety and unnecessary follow-up procedures.

In policing, AI has exposed hidden biases that can creep into machine learning models, reflecting the unfairness embedded in our world. Machines that inherit our prejudices might make unpopular medical aids.

AI scientists are working on the problems of bias and interpretability, while also working with clinicians to design artificial intelligence that is more transparent about uncertainty.


Beyond the technological advances in AI for improving human health, both ethics and interpretation will play central roles in its acceptance.

Srivas Chennu, Lecturer in eHealth, University of Kent

This article was originally published on The Conversation. Read the original article.