Brendan Clarke, UCL

b.clarke@ucl.ac.uk

I’m due to give a departmental seminar on ignorance at HPS in Cambridge on 27 November. The idea, which as usual I’m still fleshing out, is to explore a question about evidence in medicine using a mixture of historical and philosophical work that deals, loosely, with ignorance. I’ll sketch this integrated HPS approach in a moment, once I’ve set out the problem that was my starting point for this research.

This question is likely to be familiar to philosophers (and to scientific practitioners): what’s the difference between absence of evidence and evidence of absence? It is well-worn, and the usual answer is that there is some important difference between the two (see Sober 2009 and Strevens 2009 for examples). Rather than attempting to add to the stock of answers to this general problem, though, I want to explore a more constrained version that we might encounter when thinking about evidence-based medicine (EBM).

Picture two scenarios where we are looking for evidence to support a claim that a particular drug is an effective treatment for a particular disease. Should we think differently about cases:

a) where there is no evidence to be found because no research has been conducted

and

b) where there is no evidence to be found, despite much research having been conducted

My strong intuition is that we should think differently about these cases when deciding what to believe about the possible efficacy of our drug. In case b), we should seriously doubt whether the drug is effective, whereas in case a) we simply don’t know whether it is or not. More generally, I think that the story of how we come not to know about a particular hypothesis should constrain our beliefs about that hypothesis, and one important part of this story is an account of how hard researchers have looked for evidence bearing on it.

However, trying to apply this intuition to practice is not very easy, largely because most unsuccessful trials are not published. Okay, so clinical trials registries offer a possible solution to this difficulty, but this assumes that data can be collected comprehensively. Entirely anecdotally, I’ve had several experiences of finding seriously incomplete data in apparently compulsory clinical trials registries, and plan to discuss this in a future blog post. But for whatever reason, it’s often not possible to figure out just how much unpublished research there has been in particular cases.

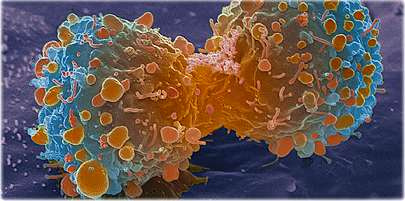

This is one reason that I’ve started thinking more historically about this issue. I’ve already talked on this blog about the case of cervical cancer. To briefly recap, cervical cancer is now thought to be caused by infection with human papillomavirus (HPV). However, for the two decades between about 1965 and 1984, cervical cancer was thought to be caused by infection with an unrelated virus known as herpes simplex virus (HSV). From the first suggestion that HSV might cause cervical cancer, its causal role was thought to be highly plausible, largely because of the roles played by herpes viruses in causing cancers in animals. By analogy with these animal tumours, an extensive research programme developed around HSV that was predicated on investigating its (possible) aetiological role in cervical cancer.

What’s interesting here is that this unsuccessful research on HSV produced many publications. An informal PubMed search turned up something like 400 publications on the subject, spanning the period from the first detection of HSV in cervical cancer tissue (Naib et al 1966) to the pair of reports from the Prague study that showed no correlation between cervical cancer and HSV (Vonka et al 1984a and b). Strictly, Prague was not the end: perhaps because of the length of journal publication cycles, articles supporting the herpes hypothesis continued to appear after this devastatingly negative report was published (see Aurelian 1984, and hope fervently that history isn’t so unkind to us).

Something notable about all these publications is that they contain precious few results, at least on the aetiological question of whether HSV causes cervical cancer. Most of them are instead about methods and models. An excellent example is the set of papers in a 1973 special issue of Cancer Research containing the proceedings of a Symposium on Herpesvirus and Cervical Cancer, sponsored by the American Cancer Society and held at the Key Biscayne Hotel, Key Biscayne, Florida (see e.g. Sever 1973 for a typical example, and Klein 1973 and Goodheart 1973 for overviews). Very few of these papers directly implicate HSV in the genesis of cancer of the cervix. Yet despite this, HSV remained by far the most plausible cause of cervical cancer to cancer-virus researchers at the time.

In the seminar paper next week, my aim is to understand this persistent absence of evidence in the light of recent research into agnotology, the study of culturally induced ignorance or doubt. The emphasis in much of this work, however, is firmly on ignorance as “something that is made, maintained, and manipulated” (Proctor and Schiebinger 2008:8). Typical cases discussed in the agnotology literature, such as the military classification of documents or the doubt deliberately cast on the causal link between smoking and lung cancer, are characterised by the deliberate obscuring of knowledge by individuals or organisations. This is not so for cervical cancer, where a persistent absence of knowledge seems to have been ignored rather than manufactured. I therefore want to explore an epistemic question about agnotology: when should a persistent absence of evidence make us sceptical about a particular hypothesis?

One historian’s titbit: the Key Biscayne Hotel, where the cervical cancer symposium was held, was just a stone’s throw from the “Florida White House”, the waterfront compound where Richard Nixon spent a good deal of time in December 1972 as the Watergate scandal began to build. What a time and place to be dealing with secrets and their dissolution.

“Richard M. Nixon, ca. 1935 – 1982 – NARA – 530679” – U.S. National Archives and Records Administration. Licensed under Public domain via Wikimedia Commons

References

Aurelian, L. 1984. Herpes simplex virus type 2 and cervical cancer. Clinics in Dermatology. 2(2): 90-99.

Goodheart, CR. 1973. Summary of informal discussion on general aspects of herpesviruses. Cancer Research. 33(6): 1417-1418.

Klein, G. 1973. Summary of Papers Delivered at the Conference on Herpesvirus and Cervical Cancer (Key Biscayne, Florida). Cancer Research. 33(6): 1557-1563.

McIntyre, P. 2005. Finding the viral link: the story of Harald zur Hausen, Cancer World. July-August 2005: 32-37.

Naib, ZM., Nahmias, AJ. and Josey, WE. 1966. Cytology and Histopathology of Cervical Herpes Simplex Infection. Cancer. 19(7): 1026-1031.

Proctor, RN. and Schiebinger, L. 2008. Agnotology: the making and unmaking of ignorance. Stanford University Press.

Sever, JL. 1973. Herpesvirus and Cervical Cancer Studies in Experimental Animals. Cancer Research. 33(6): 1509-10.

Sober, E. 2009. Absence of evidence and evidence of absence: Evidential transitivity in connection with fossils, fishing, fine-tuning, and firing squads. Philosophical Studies. 143(1): 63-90.

Strevens, M. 2009. Objective evidence and absence: comment on Sober. Philosophical Studies. 143(1): 91-100.

Vonka V, Kanka J, Jelínek J, Subrt I, Suchánek A, Havránková A, Váchal M, Hirsch I, Domorázková E, Závadová H, et al. 1984a. Prospective study on the relationship between cervical neoplasia and herpes simplex type-2 virus. I. Epidemiological characteristics. International Journal of Cancer. 33(1): 49-60.

Vonka V, Kanka J, Hirsch I, Závadová H, Krcmár M, Suchánková A, Rezácová D, Broucek J, Press M, Domorázková E, et al. 1984b. Prospective study on the relationship between cervical neoplasia and herpes simplex type-2 virus. II. Herpes simplex type-2 antibody presence in sera taken at enrollment. International Journal of Cancer. 33(1): 61-66.

Götzsche, Peter. 2013.

Carpenter, Daniel. 2014.